Problem

The Bird Banding Lab (BBL) is a subagency of the United States Geological Survey. The office is tasked with tracking migratory birds across North America.

Recently the agency has seen a decline in user satisfaction and data integrity due to usability issues with the agency's database software, BANDIT. Frustrated users have begun using shadow systems to correct errors they feel the software is producing. This has led to a fragmented system that lacks uniform data and, as a result, to reduced data integrity.

To address these issues, an update of the software has been funded. To help prevent the BBL from experiencing similar issues after this update, a team of three, including myself, worked alongside the Bird Banding Lab to establish recommendations for how the software should be altered to best improve usability.

Process

Research into the software took place over the course of four months. Researchers had no prior experience with the software, which helped prevent them from influencing participants during user tests. Researchers were split into four groups, each focused on a different type of bander, to ensure a diverse participant pool. Since banders are located across both the United States and Canada, we used Zoom, a web conferencing tool, to conduct the research.

There were two rounds of research for this project. The preliminary round was a set of contextual inquiries, a process in which we observed users interacting with the software in a way that was familiar to them. During each session, users were asked to show us a task they did frequently. Users were encouraged to speak openly, and researchers asked follow-up questions about how the task had been completed. Using the information collected during this initial round, we created a user matrix to quickly compare users' behavior.

In the task analysis portion of our research, four users were tested. One was tested in person at the University’s user research lab using eye-tracking software. The other three participants were tested remotely using teleconferencing and screen-sharing software. All of the sessions were recorded. Each user was asked to complete five detailed tasks within BANDIT so that we could better understand the current user journey.

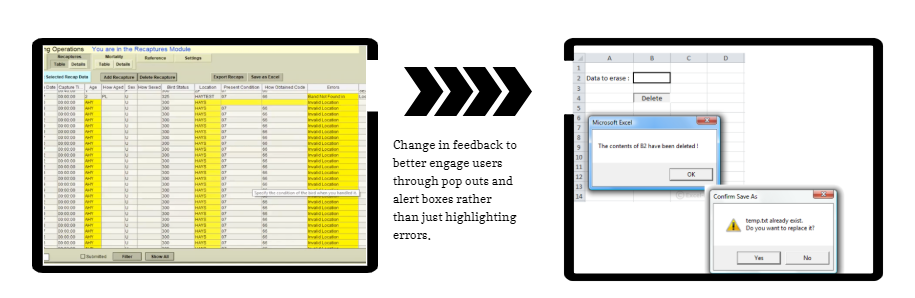

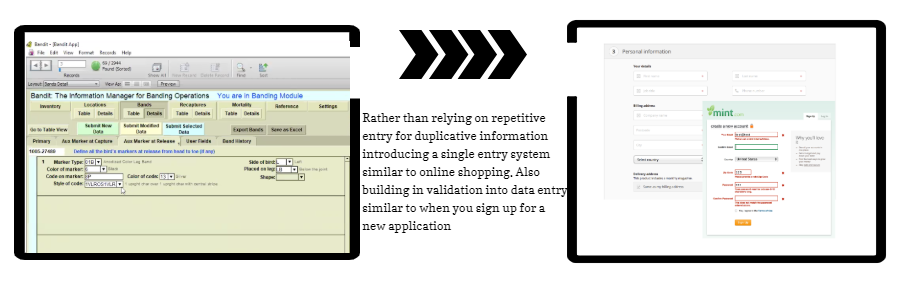

Testing revealed several areas of concern for users, such as a lack of feedback, poor readability, and poor findability. Users were experiencing content overload when trying to interact with the software. Many of the issues banders ran into already had resolutions within the program; however, because of the program's complexity, those resolutions were out of reach for many users.

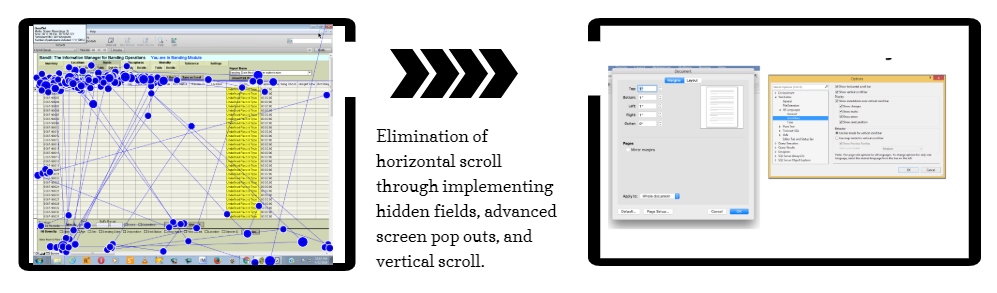

Eye-tracking results showing the lack of findability within the software's horizontal scroll

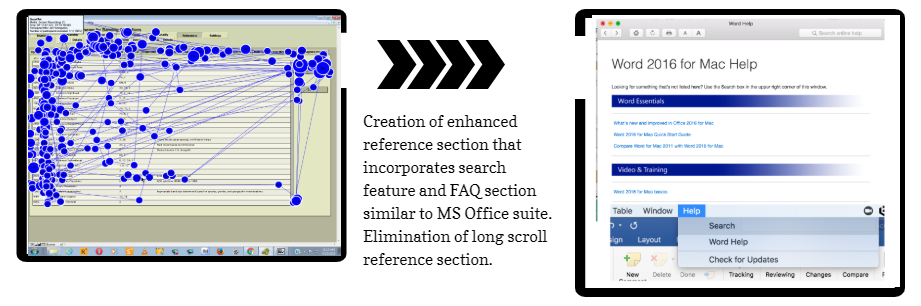

Lack of findability within the reference list, as shown by eye tracking

Solution

Based on the findings and recommendations provided, there are both short-term and long-term fixes the BBL can put into place to improve the usability of BANDIT. Short-term fixes include identifying target users for the batch upload, providing an FAQ section, increasing advertisement of print and electronic reference manuals, and continuing to encourage feedback from users. We also encourage promoting features and developing standardized training materials for users. These solutions leverage the strengths that BANDIT already has at low cost and effort, and they will help mitigate frustrations with the program until a more permanent redesign can take place.

In the long term, we recommend implementing enhancements such as improved feedback, data entry, and reference sections. We also recommend implementing a web-based application so banders are able to access BANDIT from whatever system they are currently using. This would also circumvent many of the technology barriers that users experience, such as BANDIT's operating system limitations. Finally, we recommend incorporating an error feedback report option, similar to the error message one receives when an application, such as Microsoft Word, shuts down unexpectedly. This would allow the BBL to better capture what errors users are seeing in real time and to address those errors in a more timely manner.

Feedback Recommendations

Horizontal Scroll Recommendation

Redundancy Recommendation